The importance of adeptly managing data in our increasingly digital world cannot be overstated in a world where data has been aptly called the ‘new oil’. A byte of data, whether it’s from an e-commerce platform or sensor outputs in a manufacturing facility, can yield insights, optimize processes, and drive innovation.

Yet, raw data, in its unprocessed form, is often chaotic and unyielding. This is where data engineering comes into play, serving as the cornerstone for transforming vast data lakes into structured, usable information. At the heart of effective data engineering lie the best data engineering tools.

These tools, varied in their functionalities and designs, cater to different stages of the data lifecycle. They assist in tasks ranging from data collection and storage to transformation and integration. For any data-driven organization, selecting the best tools becomes a strategic imperative as data volumes continue to rise.

Quick List of 5 Best Data Engineering Tools

Here is our quick list to give you an overview of our recommended tools —

1. Amazon Redshift: A fully-managed data warehouse solution, Redshift offers lightning-fast query performance through its columnar storage technology, making it the go-to for businesses looking to scale seamlessly with massive datasets.

2. Segment: Dubbed as the ultimate platform for customer data infrastructure, Segment allows businesses to integrate, collect, and harness their data effortlessly from multiple sources.

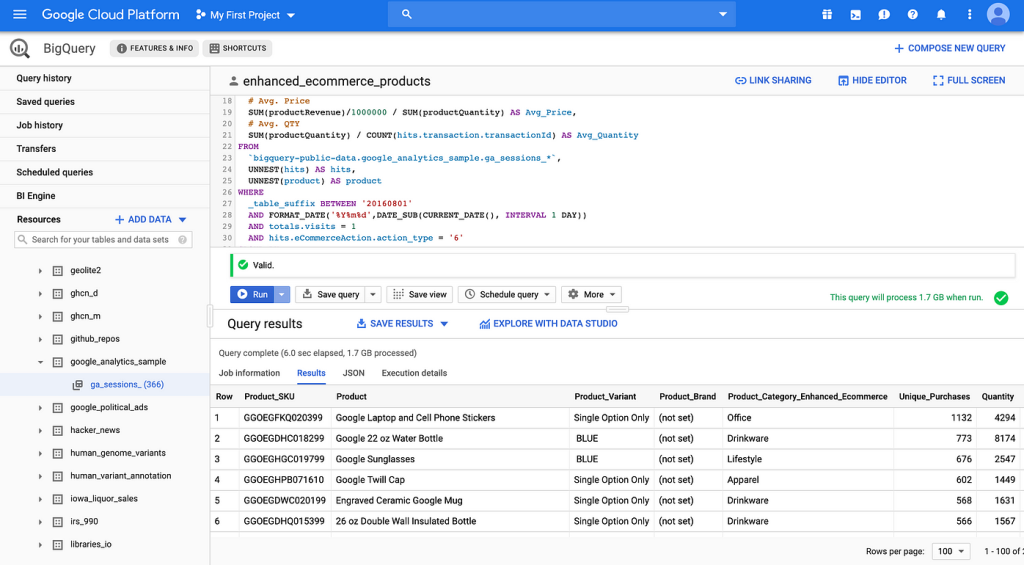

3. Big Query: As Google’s serverless, multi-cloud data warehouse, Big Query empowers businesses to turn big data into actionable insights with unparalleled speed.

4. Apache Spark: Open-source Apache Spark is renowned for its in-memory processing, offering data engineers the ability to handle big data analytics and other complex tasks.

5. Redash: Embracing the power of open-source, Redash simplifies data exploration and visualization. Its ability to integrate with multiple data sources and provide real-time, dynamic dashboards makes it a favorite among data enthusiasts.

Comparison Table Of Top Data Engineering Tools

Now let’s take a look at the table below to give you an idea of our tools for data engineering —

Tools | Use Case | Data Sources | Integration | Ease of Use | Scalability |

| Data warehousing and analytics | AWS services, Third-party tools | Extensive AWS integrations | High | Excellent |

| Data integration and collection | 300+ integrations, including databases, SaaS platforms | Wide range of tech stack integrations | Moderate | Very High |

| Real-time analytics and data warehousing | Google services, External tools | Broad GCP ecosystem integrations | High | Excellent |

| Big data processing and distributed analytics | Hadoop Distributed File System (HDFS), Local file systems, others | Multiple libraries & languages support | Moderate | Excellent |

| Data exploration and visualization | SQL, NoSQL, Big data sources, cloud databases | RESTful API, Python, and JavaScript integrations | High | High |

What are the Best Data Engineering Tools?

The best data engineering tools are software solutions that enable data engineers to efficiently design, manage, and orchestrate data workflows. These tools form the backbone of modern data architectures, handling everything from data extraction to transformation, loading, and more.

Spotlight on Data Engineering

At its core, data engineering focuses on the design and construction of systems and infrastructure for collecting, storing, and analyzing data. With the growing volume of data generated daily, there’s an increasing demand for professionals skilled in this domain.

Essential Tools for the Data Engineer

While there are numerous tools available, some have gained popularity due to their robust features, scalability, and adaptability to different data environments. Tools such as Amazon Redshift and Apache Spark, for instance, have become industry staples.

Database Management and Beyond

But beyond databases, these tools also provide features for ETL processes, real-time data streaming, and batch processing. Thus, these tools make them indispensable for many data projects.

How Best Data Engineering Tools Can Help Your Software Development or Testing

It is important for you to understand how these tools can be used to develop and test software.

Streamlining the Data Engineering Lifecycle

From the initial stages of data ingestion to its final visualization, the right tools can significantly cut down on the time and resources required. They help automate many tasks that would otherwise be manual, reducing room for error.

Enhancing Data Teams’ Productivity

For data teams, having access to these tools means they can collaborate more efficiently, with predefined workflows and templates that ensure consistency across projects.

Integration with Current Projects

Tools for data engineering often come with integrations for popular software development tools and platforms. Along with that to ensure the consistent quality of software, it’s vital to adopt a standardized testing process.

So, they make it easier for developers to incorporate data into their applications.

Why Best Data Engineering Tools Are Important to Your Software Testing

Let’s take a moment to understand why these tools are so important –

Data-Driven Testing Decisions

For software testing, access to accurate and timely data is crucial. The right engineering tool can provide testers with the data they need, in the format they require, to make informed testing decisions.

Ensuring Data Integrity Throughout

A robust data engineering tool will ensure that the data remains consistent, reliable, and trustworthy throughout the engineering lifecycle. There is no doubt that this is especially important during the testing phase.

Empowering Data Projects with Quality Assurance

Lastly, in the era of data-driven decision making, ensuring the quality of data has never been more critical. The right tools not only help in processing and managing data but also play a pivotal role in ensuring its accuracy and reliability, leading to more successful data projects.

Besides, the successful delivery of a project is a collective effort, with the test team playing a pivotal role in ensuring quality

Best Data Engineering Tools

Let’s take a closer look at each tool so you’ll have a better understanding of it –

1. Amazon Redshift

Modern companies are constantly looking for data warehousing solutions that can handle large datasets without sacrificing speed or efficiency. Enter Amazon Redshift. It’s a cloud-based data warehousing service from Amazon Web Services (AWS) for online analytic processing (OLAP).

It provides a secure and scalable environment to manage and analyze data. Recognized in the list of data engineering tools, Redshift stands out for its user-friendly interface, advanced query performance, and integration capabilities.

Whether you’re a startup or an established enterprise, Redshift’s rich toolset ensures businesses can extract actionable insights from their data with ease.

About Amazon Redshift

- Employee Numbers: Over 1 million (Amazon as a whole)

- Founding Team: Developed by Amazon Web Services (AWS), an initiative under the leadership of Andrew Jassy.

Key Features

It’s got some remarkable features to make it stand out from the crowd and they’re —

Scalability On Demand

Redshift offers flexibility in data storage and compute capacity. Users can start with just a small dataset and expand to petabyte-scale as their data grows, ensuring no wastage of resources. This kind of scalability is a boon for growing businesses, ensuring they don’t have to constantly switch platforms.

Advanced Query Performance

Utilizing techniques like columnar storage, data compression, and parallel processing, Redshift delivers fast performance for complex queries. Over time, its machine learning capabilities optimize queries based on past patterns, further enhancing the speed and efficiency of data retrievals.

Robust Security Measures

Safety is paramount in data warehousing. Redshift offers robust security measures, which are subjected to verification testing, for data both in transit and at rest. Combined with AWS Identity and Access Management (IAM), users can easily control who accesses the data, providing multi-layered security.

Integration with AWS Services

As a pivotal part of the AWS ecosystem, Redshift offers seamless integration with various AWS services. This ensures users have a holistic data engineering tools and technologies experience, making data management, analysis, and storage an efficient process.

Cost Management

Cost-effectiveness is where Redshift shines. With features that rival even the best data engineering tools free of charge, Redshift offers a pay-as-you-go model, ensuring businesses only pay for the storage and computation they utilize.

Pros of using Amazon Redshift

- Exceptional scalability matching business growth.

- Strong data encryption and security protocols.

- Simplified integration within the AWS ecosystem.

- Predictable and flexible pricing model.

Cons of using Amazon Redshift

- Can be overwhelming for beginners unfamiliar with AWS.

- Occasional complexities in custom query optimization.

Pricing

- RA3.4xlarge: $1.22 – $3.26 Per hour

- RA3.16xlarge: $8.61 – $13.04 Per hour

- Dc2.large: $0.094 – $0.25 Per hour

- Dc2.8xlarge: $1.50 – $4.80 Per hour

Customer Ratings

- G2: 4.3 based on 373 reviews

Our Review of Amazon Redshift

Amazon Redshift emerges as a formidable player in the data warehousing landscape. Its emphasis on scalability, performance, and security makes it a preferred choice for businesses of all sizes.

While there’s an abundance of open-source data engineering tools in the market, the managed service and constant optimization Redshift offers is unparalleled. However, the integration depth with AWS means new users might require some time and training to extract its full potential.

If you’re in search of a comprehensive, cost-effective, and high-performing data warehousing solution, Redshift deserves serious consideration.

2. Segment

Having a centralized platform to aggregate and manage customer data is crucial to successful marketing and product optimization in today’s world. Segment, at its core, is a customer data platform that facilitates this by allowing businesses to collect, clean, and control their customer data.

It streamlines data collection from multiple sources and channels, allowing companies to maintain a single, unified view of their customer. This not only optimizes personalized customer experiences but also paves the way for effective marketing strategies.

With Segment, businesses can harness the benefits of accurate, real-time data, ensuring that every decision is backed by actionable insights.

About Segment

- Employee Numbers: Over 500

- Founding Team: Peter Reinhardt, Calvin French-Owen, Ian Storm Taylor, and Ilya Volodarsky.

Key Features

A few of its features differentiate it from the rest, including —

Unified Customer View

Segment creates a comprehensive profile of every customer by pulling data from multiple sources. This unified view means businesses no longer need to hop between tools to get a full picture, enhancing efficiency and accuracy.

Protocols (Data Quality Control)

Segment’s Protocols ensure that your data adheres to a set standard. It validates data before it’s sent to your tools, thereby maintaining the integrity and quality of the information. This eliminates discrepancies and ensures uniformity.

Real-time Data Sync

In a world where real-time responses are crucial, Segment ensures that your data is synced in real-time across all connected tools. This guarantees that all platforms have the most current and accurate customer information.

Privacy and Compliance Tools

Segment ensures that businesses stay compliant with data privacy regulations. Its suite of privacy tools enables companies to manage customer consent, handle deletion and access requests, and stay compliant with regulations like GDPR and CCPA.

Extensive Integration Options

Segment offers integrations with over 300 tools and platforms. This broad range means businesses can push and pull data to and from a plethora of third-party applications, be it analytics, marketing automation, or CRM tools.

Pros of using Segment

- Centralized data management offers a 360-degree customer view.

- Assured data quality with validation checks.

- Real-time data synchronization across platforms.

- Strong commitment to data privacy and compliance.

- Extensive integration options with popular third-party tools.

Cons of using Segment

- Might be overwhelming for smaller businesses.

- Pricing can be on the higher side for premium features.

- Requires some initial setup and familiarization.

Pricing

- Free: $0

- Team: Prices start at $120 per month

- Business: Need to contact

Customer Ratings

- G2: 4.6 based on 512 reviews

Our Review of Segment

Segment is undeniably a powerhouse in the realm of customer data platforms. Its capability to unify data from various sources into one accessible platform makes it indispensable for medium to large enterprises.

The emphasis on data quality and compliance ensures that businesses not only gather insights but do so responsibly. The only caveats are its pricing and the learning curve for beginners.

Any business that is interested in better understanding its customers can make good use of Segment’s actionable insights and efficient data management.

3. Big Query

In today’s data-centric environment, companies are in constant search of tools that can handle massive datasets without a hitch. Google’s BigQuery stands out as a premier solution in the list of data engineering tools.

As a fully managed, serverless data warehouse, BigQuery provides lightning-fast SQL analytics across large datasets. The tool’s key allure lies in its ability to run complex queries swiftly, thereby ensuring timely insights for businesses.

It’s especially beneficial for those who already are engaged in Google’s ecosystem, as it flawlessly integrates with other Google Cloud services. For businesses of all sizes, BigQuery’s ability to analyze terabytes of data in seconds and its pay-as-you-go model make it an attractive option.

About Big Query

- Employee Numbers: BigQuery is a product by Google Cloud, which employs thousands.

- Founding Team: BigQuery was developed and launched by Google’s internal team.

Key Features

There are a few notable features including the following —

Serverless Architecture

BigQuery operates on a serverless model which means businesses don’t have to worry about infrastructure management. You only focus on analyzing data without the overhead of managing the underlying systems.

High-Speed Analysis

Leveraging the power of Google’s infrastructure, BigQuery can run SQL-like queries against multi-terabyte datasets in mere seconds. This ensures that data insights are always timely and actionable.

Real-time Analytics

BigQuery’s streaming capability lets businesses ingest up to 100,000 rows of data per second. This ensures real-time analytics and insights, enabling quick decision-making processes.

Machine Learning Integration

One of its distinguishing features is its seamless integration with Google’s machine learning tools. With BigQuery ML, users can build and deploy machine learning models directly within the tool.

Data Security

As part of the Google Cloud, BigQuery offers robust security features. Some of them include encryption at rest and in transit, identity management, and private network connectivity.

Pros of using Big Query

- Serverless nature reduces overhead costs.

- High-speed analysis for large datasets.

- Direct integration with Google’s machine learning tools.

- Robust security measures ensure data integrity.

- Comprehensive toolset for diverse analytics needs.

Cons of using Big Query

- Costs can ramp up with increased data queries.

- Limited integrations outside of the Google ecosystem.

Pricing

- They offer a free tier, but more extensive operations fall under their premium pricing.

Customer Ratings

- G2: 4.5 based on 583 reviews

Our Review of BigQuery

BigQuery, without a doubt, is among the best data engineering tools free tiers available. It promises speed, scalability, and the trustworthiness of the Google Cloud platform.

Its serverless nature makes infrastructure management a thing of the past, but its machine learning integration gives it an edge. New users might need some time to familiarize themselves with its workings, and costs can be a concern if not monitored.

Yet, for businesses ready to invest in top-notch data analytics, BigQuery is hard to overlook.

4. Apache Spark

In the universe of big data analytics, Apache Spark is a luminous star. Part of the best data engineering tools, it has been a preferred choice for organizations striving for real-time analytics and swift data processing.

With its in-memory data processing ability, Spark effortlessly handles large datasets, making it indispensable for businesses aiming for timely insights. Known for its flexibility, it can run in various environments, be it standalone, cloud, or with popular cluster managers like Mesos and YARN.

Notably, as one of the open-source data engineering tools, Spark has a vast community that continually refines and enhances its capabilities. This makes it an ideal solution for businesses seeking robust, reliable, and evolving data engineering solutions.

About Product

- Employee Numbers: Apache Spark is a community-driven project with countless contributors worldwide.

- Founding Team: Developed at UC Berkeley’s AMPLab by Matei Zaharia in 2009 and later donated to the Apache Software Foundation.

Key Features

A few of the features that distinguish this product from others are as follows —

In-Memory Processing

Apache Spark’s distinguishing feature is its in-memory processing, which significantly speeds up analytic applications by storing data in the RAM of the servers.

Versatile Data Integration

Apache Spark seamlessly integrates with a variety of data sources. Some of them are HDFS, Cassandra, and even cloud-based storage systems, ensuring data ingestion without a hitch.

Advanced Analytics

Beyond simple data processing, Spark boasts built-in libraries for advanced analytics tasks. From machine learning (Spark MLlib) to graph processing (GraphX), it covers a broad spectrum of analytical tools.

Scalability and Fault Tolerance

Built on the cluster-computing framework, Spark ensures high scalability. It can quickly distribute data and computations across clusters, and its fault tolerance ensures data integrity.

Language Flexibility

Developers can use Spark with various programming languages, including Java, Scala, Python, and R, facilitating a broad user base.

Pros of using Apache Spark

- Lightning-fast in-memory data processing.

- Extensive integration capabilities with various data sources.

- Comprehensive built-in libraries for diverse analytics.

- Robust scalability and fault tolerance.

- Multiple language support caters to a wide array of developers

.

Cons of using Apache Spark

- Requires a good amount of RAM for optimal performance.

- Steeper learning curve for beginners.

- Memory management can be challenging in extensive applications.

Pricing

- Being among the best data engineering tools free of charge, Apache Spark is open-source.

Customer Ratings

- G2: 4.1 based on 39 reviews

Our Review of Apache Spark

Apache Spark stands tall in the galaxy of best data engineering tools. Its ability to process vast amounts of data swiftly, combined with advanced analytics and wide language support, makes it invaluable for businesses.

The open-source nature ensures it remains up-to-date and evolves with the ever-changing data landscape. While there are some challenges with memory management and the learning curve, the advantages heavily outweigh the drawbacks.

For businesses eyeing comprehensive data solutions, Apache Spark remains a formidable choice.

5. Redash

In today’s data-driven environment, making data accessible and user-friendly is paramount. Here’s where Redash comes in, cementing its position among the top-tier visualization tools.

It’s a querying and visualization tool that aims to simplify how you explore and visualize your data. Hailing from the realm of open-source data engineering tools, Redash offers the flexibility, adaptability, and community-driven features that tech aficionados cherish.

Redash’s data visualization capabilities, as well as its ability to connect to multiple data sources, democratize data access across teams, enable businesses to leverage it. The resulting dashboards, charts, and graphs empower teams to derive insights and drive data-informed decisions swiftly.

About Redash

- Employee Numbers: 1 to 25

- Founding Team: Founded by Arik Fraimovich as a solution to handle the challenges of data exploration and visualization.

Key Features

The following features make this product unique —

Diverse Data Source Integration

Redash stands out with its ability to integrate seamlessly with numerous data sources, from PostgreSQL to BigQuery, ensuring versatile data exploration.

Intuitive Query Editor

It boasts an intuitive SQL query editor equipped with schema browser and auto-complete features, simplifying the data querying process even for novices.

Dynamic Dashboards

Users can craft dynamic, real-time dashboards that visualize vast data sets efficiently, offering a consolidated view of the data landscape.

Alert System

The platform’s robust alert system notifies users about anomalies or essential data changes, ensuring timely interventions and informed decisions.

Collaboration Features

Redash fosters team collaboration by allowing dashboard sharing, annotations, and comments, ensuring that insights are collectively derived and discussed.

Pros of using Redash

- Open-source nature offers flexibility and community-driven updates.

- Supports a wide array of data sources.

- User-friendly interface with an efficient query editor.

- Real-time, dynamic dashboard creation.

- In-built collaboration tools to enhance team synergy.

Cons of using Redash

- Initial setup might be challenging for those unfamiliar with open-source data engineering tools.

- Might require some SQL knowledge for intricate queries.

Pricing

- Redash is available as an open-source tool, making it free for users.

Customer Ratings

G2:

Our Review of Redash

Redash emerges as a compelling choice for businesses desiring a straightforward, yet powerful data visualization tool. Its open-source nature ensures adaptability and a constant influx of community-driven enhancements.

The ease with which it connects to various data sources and the efficiency of its query editor makes it user-friendly, even for those less tech-savvy. Its strengths in data integration, dashboard creation, and collaboration make it a worthy competitor in the data engineering landscape.

For businesses, especially those on a tight budget, Redash offers incredible value, blending functionality with cost-effectiveness.

Getting the Most Out of Best Data Engineering Tools

Maximizing the utility of the best data engineering tools involves a keen understanding of both the tools and the data at hand. Here’s how to do it right:

- Up-to-Date Training: Ensure your team is well-trained and keeps up with the latest functionalities and updates.

- Scalability: Choose tools that allow for scalability to accommodate future growth.

- Data Compliance: Always adhere to data compliance and privacy regulations to secure sensitive information.

- Integration Capabilities: Leverage the tool’s integration capabilities to create a cohesive data ecosystem.

- Automated Workflows: Set up automated workflows to streamline repetitive tasks and boost efficiency.

- Monitoring and Maintenance: Regularly monitor the system and perform necessary maintenance to ensure optimal performance.

- Collaborative Environment: Foster a collaborative environment where team members can share insights and work together seamlessly.

- Feedback and Iterations: Establish a feedback loop for continuous improvements and iterative developments.

Wrapping Up

From streamlining database management to ensuring seamless data flow during software testing, data engineering encompasses a wide range of tools. As we’ve explored, the best data engineering tools offer capabilities that go beyond mere data processing.

These tools are not merely software; they are enablers, bridging the gap between raw data and actionable insights. They simplify complexities, enhance efficiencies, and foster a culture of data-driven decision-making. They empower organizations to make informed decisions, enhance productivity, and ensure data integrity throughout various projects.

In the digital age, where data-driven strategies dominate, investing in the right tools is not just advisable; it’s imperative. If you are a business leader, a data engineer, or a software developer, understanding and leveraging these tools will unlock unparalleled growth and efficiency.

Frequently Asked Questions

Are all data engineering tools expensive?

No, while some are priced premium, there are many open-source data engineering tools that are free and offer robust capabilities.

Can I use these tools even if I’m not a data engineer?

Absolutely! Many tools are user-friendly and come with comprehensive documentation to help even those with minimal data experience.

How do these tools integrate with existing software applications?

Most top-tier data engineering tools offer APIs and integration options with popular software platforms, ensuring smooth interoperability.

- 5 Best DevOps Platform and Their Detailed Guide For 2024 - December 26, 2025

- Top 10 Cross Browser Testing Tools: The Best Choices for 2024 - October 28, 2025

- 5 Best API Testing Tools: Your Ultimate Guide for 2024 - October 26, 2025